The Haptic Affect Loop

Funding

- Nokia

- UBC Mechanical Engineering Graduate Entrance Scholarship

Principal Investigators

Collaborating Academic Organizations:

- Collaborative Advanced Robotics and Intelligent Systems Laboratory

- Sensory Perception and Interaction Research Group

Thesis

Project Description

Today, the vast majority of user interfaces in consumer electronic products are built around explicit channels of interaction with human users. Examples include keyboards, gamepads, and various visual displays. Under normal circumstances, these interfaces provide clear and controlled interaction between user and device [1]. However, problems arise when these interfaces divert a significant amount of the user’s attention from more important tasks. For example, consider a person trying to adjust the car stereo while driving. The driver must divert some attention away from the primary task of driving to operate the device. Explicit interaction with peripheral devices can therefore impair the user’s ability to perform primary tasks effectively.

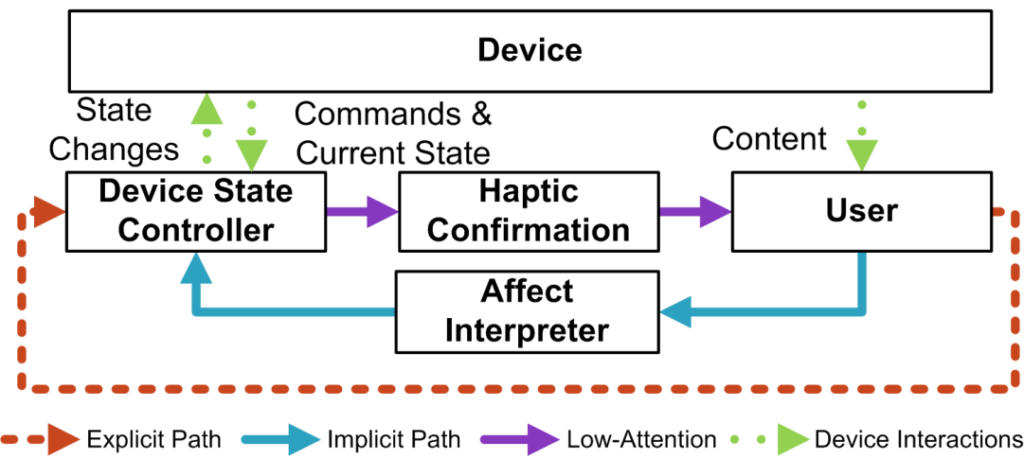

The goal of my research was to design and implement a fundamentally different approach to device interaction. Rather than relying on explicit modes of communication between a user and a device, I used implicit channels to decrease the device’s demand on the user’s attention. It is well known that human affective (emotional) states can be characterized by psycho-physiological signals that can be measured by off-the-shelf biometric sensors [2]. We proposed to measure the user’s affective response and incorporate these signals into the device’s control loop so that the device can recognize and respond to user affect. We also put forward the notion that haptic stimuli delivered through a tactile display can serve as an immediate, yet unobtrusive channel for a device to communicate to a user that it has responded to their affective state, thereby closing the feedback loop [3]. Essentially, this research defined a model for a unique user-device interface driven by implicit, low-attention communication. It is theorized that this new paradigm will be minimally invasive and will not require the user to be distracted by the peripheral device. We have termed this process, in which affect recognition leads to changes in device behaviour that are then signalled back to the user through haptic stimuli, the Haptic-Affect Loop (HALO).
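As a rough illustration of the loop described above, the following Python sketch runs one pass: read a biometric sample, estimate affect, decide on a device response, and choose a haptic cue to signal that response. It is not the project's implementation. The names `BiometricSample`, `estimate_arousal`, and `halo_step`, the two signals used, the averaging rule, and the threshold value are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class BiometricSample:
    """One reading from hypothetical off-the-shelf sensors."""
    skin_conductance: float  # assumed unit: microsiemens
    heart_rate: float        # assumed unit: beats per minute


def estimate_arousal(sample: BiometricSample, baseline: BiometricSample) -> float:
    """Toy affect estimate (an assumption, not a validated model):
    mean relative deviation from a resting baseline, clamped to [0, 1]."""
    sc = (sample.skin_conductance - baseline.skin_conductance) / baseline.skin_conductance
    hr = (sample.heart_rate - baseline.heart_rate) / baseline.heart_rate
    return max(0.0, min(1.0, (sc + hr) / 2))


def halo_step(sample: BiometricSample, baseline: BiometricSample,
              threshold: float = 0.2) -> dict:
    """One pass through the loop: sense, estimate affect, adapt the device,
    and pick a haptic cue that tells the user the device has responded."""
    arousal = estimate_arousal(sample, baseline)
    if arousal > threshold:
        # Device changes behaviour and closes the loop with an unobtrusive cue.
        return {"device_action": "adapt", "haptic_cue": "soft_tap", "arousal": arousal}
    # No change in device behaviour, so no cue is sent.
    return {"device_action": "none", "haptic_cue": None, "arousal": arousal}


resting = BiometricSample(skin_conductance=5.0, heart_rate=60.0)
print(halo_step(BiometricSample(8.0, 90.0), resting))
```

The cue is emitted only when the device actually changes behaviour, which matches the low-attention goal: the user receives feedback when there is something to acknowledge and is otherwise left alone.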

My focus within the HALO concept was on the design and analysis of the overall control loop. This required me to measure, model and optimize latency, flow and habituation between the user’s affective state and HALO’s haptic display. A related problem that I needed to address was dimensionality: which aspects of a user’s biometrics should be used to characterize affective response? For example, what combination of skin conductance, heart rate, muscle twitch, etc. best indicates that a user is happy or depressed? As an extension to this problem, how can the environmental context surrounding a user be established to calibrate affect recognition, for example, jogging in the park versus working in the office? Similarly, I also needed to specify the dimensionality of the haptic channel that notifies the user of the device’s response while maintaining the goal of not distracting the user: where (e.g. back of the neck, fingertip) and with what stimulus (e.g. soft tapping vs. aggressive buzzing) the haptic feedback should be delivered.
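One way to picture the context-calibration question is to score each biometric reading relative to a baseline recorded in the same context, so that a heart rate that is ordinary while jogging is not mistaken for arousal at a desk. The Python sketch below shows that idea only. The baseline numbers are made-up placeholders rather than measured data, and the equal weights, the z-score normalization, and the names `BASELINES`, `WEIGHTS`, and `calibrated_score` are assumptions, not the method used in this research.

```python
from statistics import mean, stdev

# Made-up placeholder baselines per context (NOT measured data).
BASELINES = {
    "office": {
        "heart_rate": [62, 65, 63, 66, 64],
        "skin_conductance": [4.8, 5.0, 5.2, 5.1, 4.9],
    },
    "jogging": {
        "heart_rate": [138, 142, 140, 145, 135],
        "skin_conductance": [9.5, 10.0, 10.5, 9.8, 10.2],
    },
}

# Assumed equal weighting of the two signals; choosing these weights is
# exactly the open dimensionality question raised in the text.
WEIGHTS = {"heart_rate": 0.5, "skin_conductance": 0.5}


def zscore(value: float, history: list) -> float:
    """Standard score of a value against a context baseline."""
    sd = stdev(history)
    return 0.0 if sd == 0 else (value - mean(history)) / sd


def calibrated_score(reading: dict, context: str) -> float:
    """Weighted sum of per-signal z-scores relative to the given context."""
    baseline = BASELINES[context]
    return sum(WEIGHTS[k] * zscore(reading[k], baseline[k]) for k in WEIGHTS)


reading = {"heart_rate": 140, "skin_conductance": 10.0}
print(calibrated_score(reading, "jogging"))  # near zero: typical for jogging
print(calibrated_score(reading, "office"))   # large: unusual at a desk
```

The same reading produces very different scores depending on the assumed context, which is why establishing the context is a prerequisite for interpreting the biometrics.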

To validate the HALO concept, I implemented it in two use cases, both showcasing HALO’s value in information network environments where attention is highly fragmented: the navigation of streaming media on a computer or portable device, and background communication in distributed meetings. The results of the research included new lightweight affect-sensing technologies, tactile displays and interaction techniques. This work complements and applies research in the areas of communications, haptics, and biometric sensing.

[1] C. D. Wickens and J. G. Hollands, Engineering Psychology and Human Performance, 3rd ed. Prentice Hall, 1999.

[2] M. Pantic and L. J. M. Rothkrantz, “Toward an affect-sensitive multimodal human-computer interaction,” Proceedings of the IEEE, vol. 91, no. 9, pp. 1370-1390, Sep. 2003.

[3] S. Brewster and L. M. Brown, “Tactons: structured tactile messages for non-visual information display,” Proceedings of the fifth conference on Australasian user interface, vol. 28, pp. 15-23, 2004.