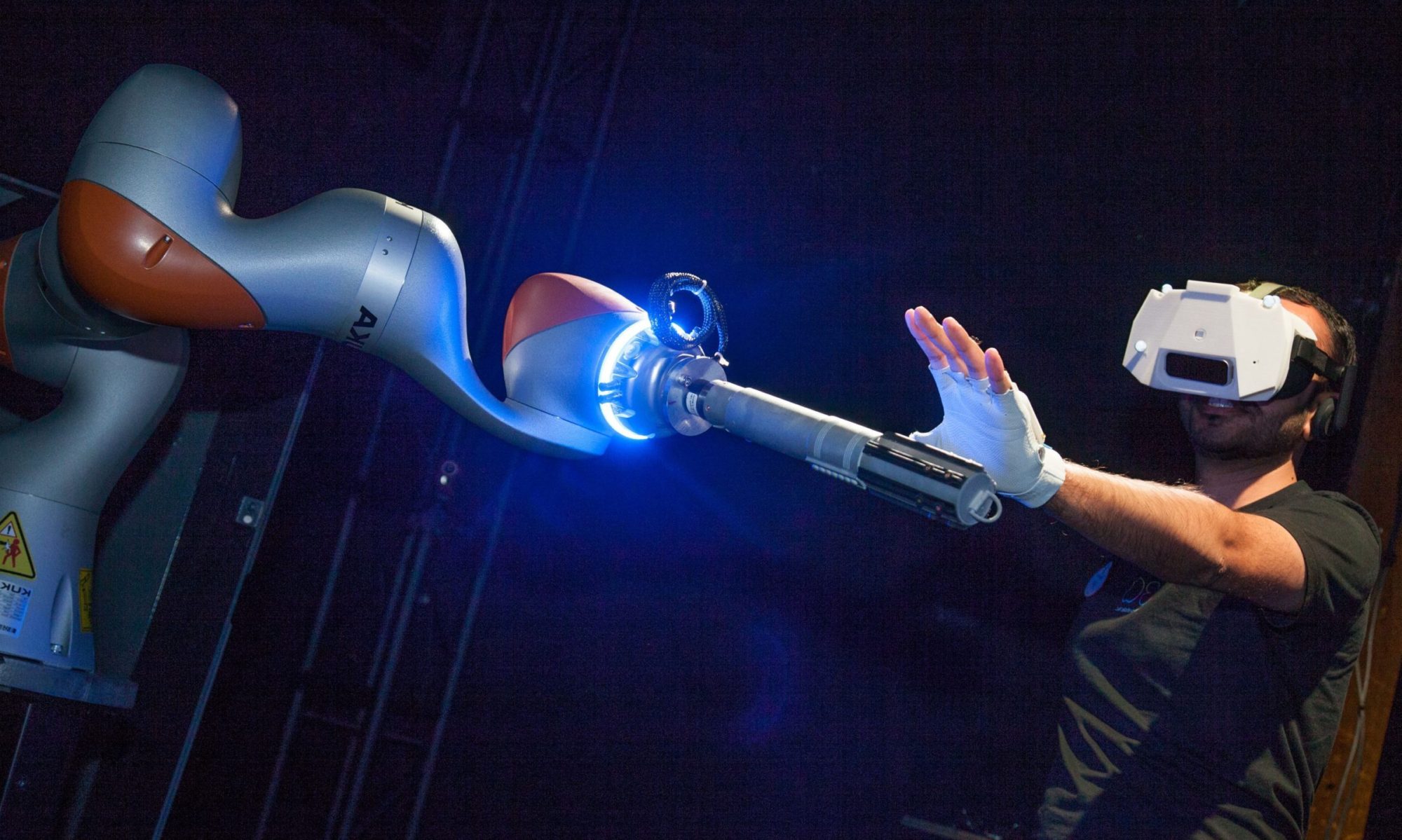

Catching a Real Ball in Virtual Reality

Description

We present a system that enables users to accurately catch a real ball while immersed in a virtual reality environment. We examine three visualizations: a rendered virtual ball matching the real one, the ball's predicted trajectory, and a target catching point lying on the predicted trajectory. In our demonstration system, we track the projectile motion of a ball as it is tossed between users. Using Unscented Kalman Filtering, we generate predictive estimates of the ball's motion as it approaches the catcher. The predictive assistance visualizations effectively augment the user's senses but can also alter the user's catching strategy.
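The description names Unscented Kalman Filtering but not the state model or its parameters. As a minimal sketch, assuming a six-element state (position and velocity), position-only measurements from the tracker, constant gravity along -z, and an optional hypothetical quadratic-drag coefficient `k`, one UKF predict/update step and a catch-point predictor (where the predicted path descends through an assumed catch height) could look like this. All function names, the catch height, and the tuning values are illustrative assumptions, not the demonstration system's actual code.

```python
import numpy as np

# Assumption: z points up, gravity is constant, and k is a hypothetical
# quadratic-drag coefficient (k = 0.0 gives pure projectile motion).
GRAVITY = np.array([0.0, 0.0, -9.81])


def ballistic_step(x, dt, k=0.0):
    """Propagate state x = [px, py, pz, vx, vy, vz] forward by dt seconds."""
    p, v = x[:3], x[3:]
    a = GRAVITY - k * np.linalg.norm(v) * v
    return np.concatenate([p + v * dt + 0.5 * a * dt**2, v + a * dt])


def sigma_points(x, P, alpha=0.1, beta=2.0, kappa=0.0):
    """Scaled unscented-transform sigma points plus mean/covariance weights."""
    n = x.size
    lam = alpha**2 * (n + kappa) - n
    S = np.linalg.cholesky((n + lam) * P)   # lower-triangular square root
    pts = np.vstack([x, x + S.T, x - S.T])  # 2n + 1 points, one per row
    wm = np.full(2 * n + 1, 0.5 / (n + lam))
    wc = wm.copy()
    wm[0] = lam / (n + lam)
    wc[0] = lam / (n + lam) + (1.0 - alpha**2 + beta)
    return pts, wm, wc


def weighted_cov(wc, da, db):
    """Sum over sigma points of wc[i] * outer(da[i], db[i])."""
    return (wc[:, None] * da).T @ db


def ukf_step(x, P, z, dt, Q, R, k=0.0):
    """One predict/update cycle; z is a measured 3D ball position."""
    # Predict: push each sigma point through the ballistic model.
    pts, wm, wc = sigma_points(x, P)
    prop = np.array([ballistic_step(s, dt, k) for s in pts])
    x_pred = wm @ prop
    P_pred = weighted_cov(wc, prop - x_pred, prop - x_pred) + Q

    # Update: the (assumed) measurement model observes position only.
    pts, wm, wc = sigma_points(x_pred, P_pred)
    z_pts = pts[:, :3]
    z_pred = wm @ z_pts
    S = weighted_cov(wc, z_pts - z_pred, z_pts - z_pred) + R
    C = weighted_cov(wc, pts - x_pred, z_pts - z_pred)
    K = C @ np.linalg.inv(S)
    x_new = x_pred + K @ (z - z_pred)
    P_new = P_pred - K @ S @ K.T
    return x_new, 0.5 * (P_new + P_new.T)  # keep P symmetric


def predict_catch_point(x, catch_height, dt=1e-3, k=0.0, t_max=5.0):
    """Roll the estimate forward until the ball descends through catch_height.

    Returns (position, time_to_catch), or None if no crossing within t_max.
    """
    t = 0.0
    while t < t_max:
        nxt = ballistic_step(x, dt, k)
        if x[2] >= catch_height > nxt[2]:
            s = (x[2] - catch_height) / (x[2] - nxt[2])  # interpolate in step
            return x[:3] + s * (nxt[:3] - x[:3]), t + s * dt
        x, t = nxt, t + dt
    return None
```

With `k = 0.0` the process model is linear, so the unscented transform reproduces an ordinary Kalman filter exactly; the sigma-point machinery only pays off once a nonlinear term such as drag is included. The predicted trajectory itself can be drawn by recording the intermediate states inside `predict_catch_point`.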

Affiliated Institution

Manuscript

Presentation Venue

IEEE Virtual Reality (VR) 2017

Los Angeles, USA

Media Coverage

Reddit, Mashable, TNW, The Verge, Engadget, Gizmodo, phys.org, Psychology Today

Additional Media

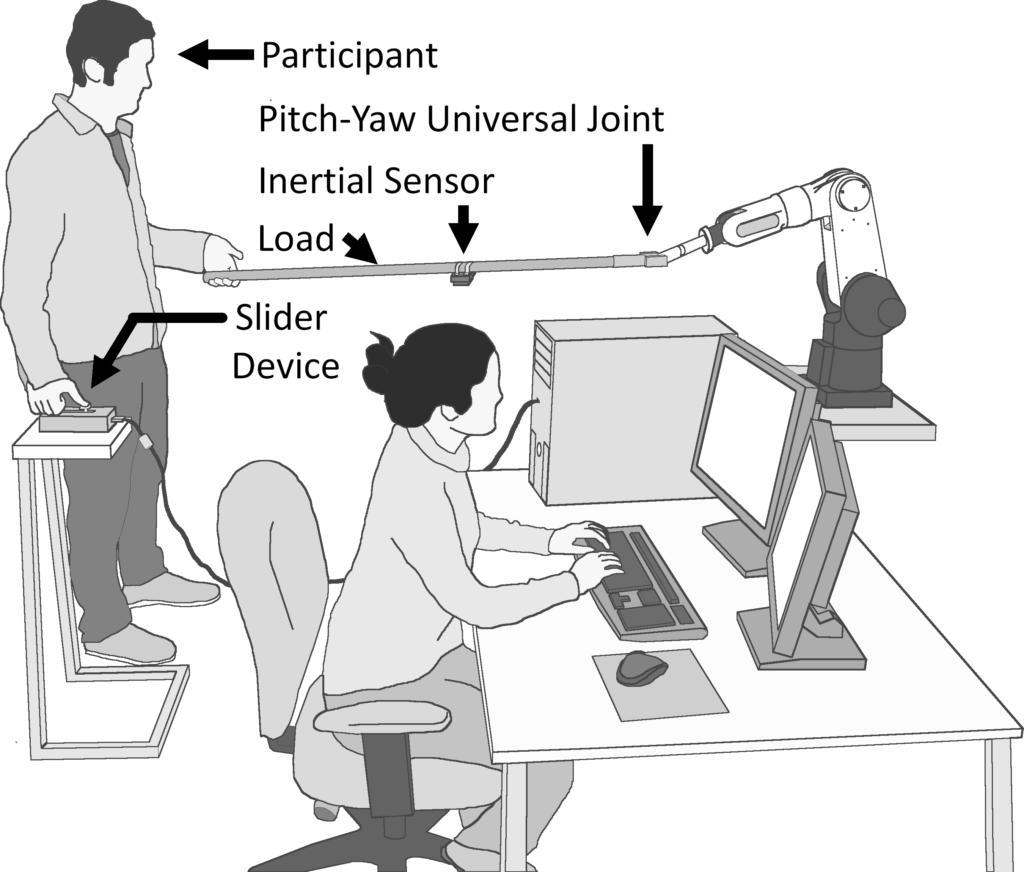

Human-Robot Collaborative Lifting

Description

Technological advances are leading toward robot assistants that are both helpful and affordable. However, before such robots can be widely deployed, design and control strategies that foster safe and intuitive interaction between robots and their non-expert human users are required. A task often identified as a potential application for robot assistants is the cooperative manipulation of a large or awkward object. In this work, we propose a controller that enables a robot assistant to help a human raise and lower a physically large object. Fifty human subjects independently participated in tuning the proposed controller across four studies. Results show that user preference in tuning this controller is independent of load length and can be mapped linearly across the controller's natural frequency-damping ratio space. Additionally, we find evidence for a universal, 'one-size-fits-most' tuning that is preferred by (or at least acceptable to) a majority of users, so customizing the controller tuning to individual users may not be necessary.
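The description does not state the controller's structure, only that it is tuned in a natural frequency-damping ratio space. As a hypothetical illustration of what a single point in that space means, the sketch below assumes the robot's end of the load behaves like a second-order mass-spring-damper that follows the height of the human's end: `omega_n` sets how quickly it follows and `zeta` how much it overshoots. The function names, the step input, and the integration scheme are assumptions for illustration, not the published controller.

```python
import math


def simulate_follower(target, omega_n, zeta, dt=1e-3, duration=5.0):
    """Hypothetical robot-end height response for one (omega_n, zeta) tuning.

    The robot's end of the load (height y, velocity v) is driven toward the
    human's end height target(t) as
        y'' = omega_n**2 * (target - y) - 2 * zeta * omega_n * y'
    and integrated with semi-implicit Euler. Returns the sampled heights.
    """
    y, v = 0.0, 0.0
    trace = []
    for i in range(int(round(duration / dt))):
        accel = omega_n**2 * (target(i * dt) - y) - 2.0 * zeta * omega_n * v
        v += accel * dt  # update velocity first ...
        y += v * dt      # ... then position using the new velocity
        trace.append(y)
    return trace


def overshoot_fraction(trace, final):
    """Peak overshoot relative to the final height (0.0 if it never overshoots)."""
    return max(0.0, (max(trace) - final) / final)


def continuous_overshoot(zeta):
    """Textbook step-response overshoot of a second-order system."""
    if zeta >= 1.0:
        return 0.0
    return math.exp(-math.pi * zeta / math.sqrt(1.0 - zeta**2))
```

For example, `simulate_follower(lambda t: 0.3, omega_n=4.0, zeta=0.3)` models the human raising their end by 0.3 m; its overshoot comes out close to `continuous_overshoot(0.3)` (about 37%), while `zeta=1.0` follows without overshoot. Sweeping `omega_n` and `zeta` over a grid is one way to lay out candidate tunings for users to compare.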

Affiliated Institutions

- Collaborative Advanced Robotics and Intelligent Systems Laboratory (The University of British Columbia)

- Institute for Computing, Information and Cognitive Systems (The University of British Columbia)

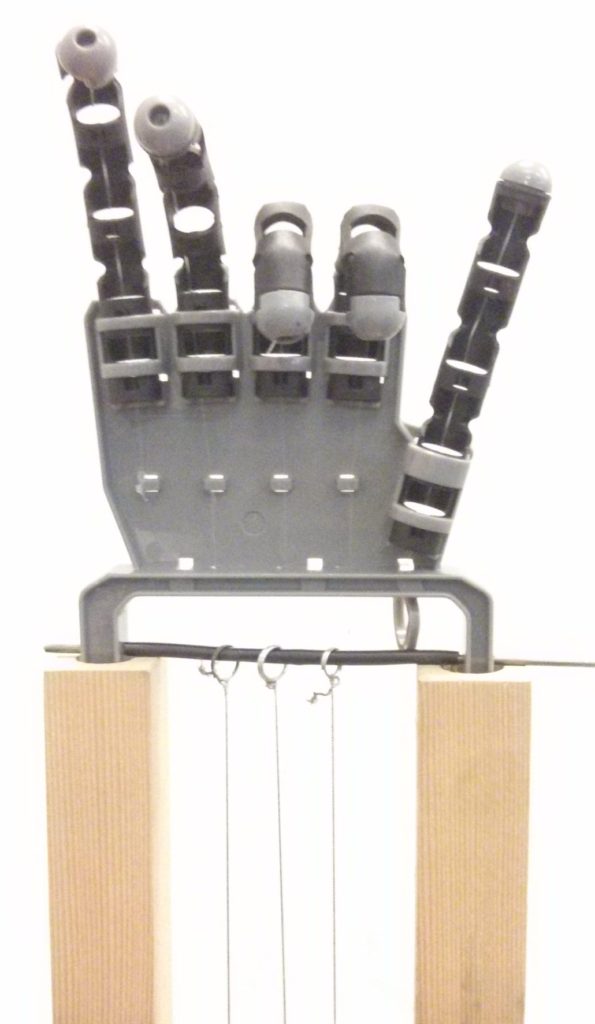

Coiled Nylon-Actuated Robot Manipulator

Description

In a joint project between the CARIS and Molecular Mechatronics labs (the latter being one of the labs that developed the coiled nylon artificial muscle actuator), we built several robotic gripper prototypes to demonstrate how these nylon actuators can be used in robotic applications.

Affiliated Institutions

- Molecular Mechatronics Lab (The University of British Columbia)

- Microsystems and Nanotechnology Group (The University of British Columbia)

- Collaborative Advanced Robotics and Intelligent Systems Laboratory (The University of British Columbia)

- Sensory Perception and Interaction Group (The University of British Columbia)

- Institute for Computing, Information and Cognitive Systems (The University of British Columbia)

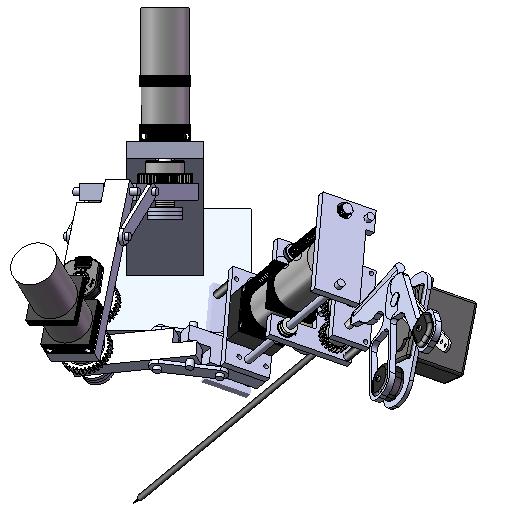

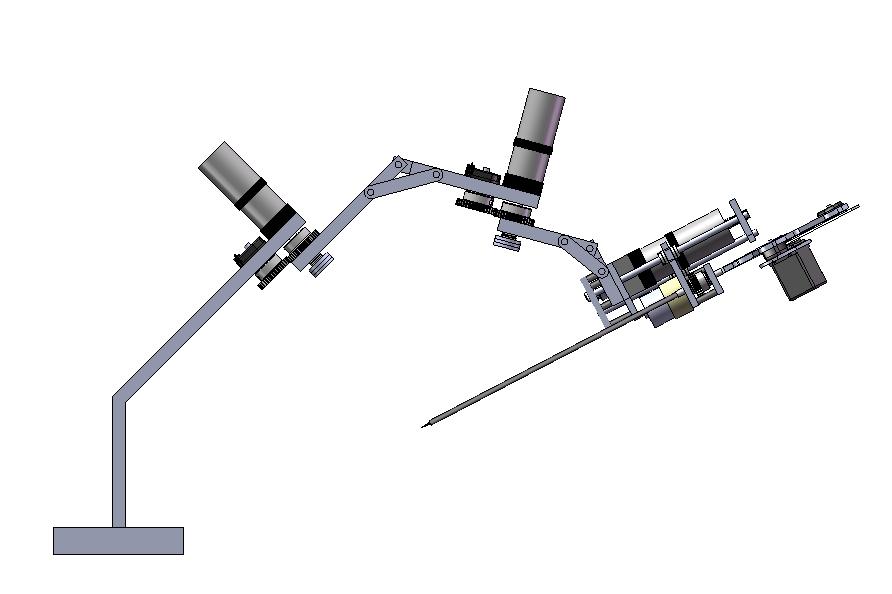

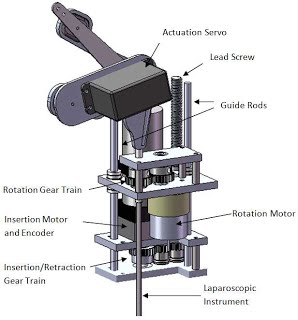

A Low-Cost Laparoscopic Robot for Image-Guided Tele-Surgery

Description

I participated in the design and construction of a low-cost laparoscopic surgical robot prototype. The robot was commissioned by the Canadian Surgical Technologies and Advanced Robotics centre for surgical training and research. With the only FDA-approved laparoscopic robot (the da Vinci, by Intuitive Surgical) costing US$1.5 million, prototype robotic platforms that cost much less are useful for surgical training, for trying new surgical approaches, and for teleoperation research. Features of the developed system included:

- 5 Degree-of-Freedom mechanical design enforcing a remote center of rotation

- Counter-weighted arms for motor efficiency

- controllable by multiple types of slave devices (e.g., the Novint Falcon)

- Tool actuation mechanism that could grasp and operate conventional laparoscopic tools interchangeably

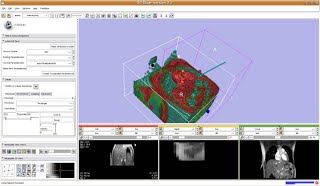

- Image-guidance and 3D visualization of the workspace through the open-source 3DSlicer
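A remote center of rotation means the tool shaft always passes through a fixed point (typically the incision port), so any reachable tool-tip position corresponds to two shaft angles plus an insertion depth. The sketch below shows that mapping under an assumed angle convention, together with a hypothetical motion-scaling rule for driving the tip from an input device such as a haptic controller. It illustrates the geometry only; it is not the prototype's actual kinematics or control code, and all names and the scale factor are assumptions.

```python
import math


def rcm_inverse(tip, pivot=(0.0, 0.0, 0.0)):
    """Return (yaw, pitch, depth) that place the tool tip at `tip` while the
    shaft passes through the fixed `pivot` (the remote center of rotation).

    Assumed convention: with yaw = pitch = 0 the shaft points straight down
    (-z); depth is the distance from the pivot to the tip along the shaft.
    """
    d = [t - p for t, p in zip(tip, pivot)]
    depth = math.sqrt(sum(c * c for c in d))
    if depth == 0.0:
        raise ValueError("tip coincides with the pivot; orientation is undefined")
    ux, uy, uz = (c / depth for c in d)
    pitch = math.asin(max(-1.0, min(1.0, uy)))  # clamp against rounding error
    yaw = math.atan2(ux, -uz)
    return yaw, pitch, depth


def rcm_forward(yaw, pitch, depth, pivot=(0.0, 0.0, 0.0)):
    """Tool-tip position for the same convention used by rcm_inverse."""
    u = (math.cos(pitch) * math.sin(yaw),
         math.sin(pitch),
         -math.cos(pitch) * math.cos(yaw))
    return tuple(p + depth * c for p, c in zip(pivot, u))


def teleop_target(tip, master_delta, scale=0.2):
    """Hypothetical motion scaling: map an input-device displacement to a
    smaller tool-tip displacement (scale < 1 refines the surgeon's motion)."""
    return tuple(t + scale * m for t, m in zip(tip, master_delta))
```

A teleoperation loop built on this idea would call `teleop_target` with each new input-device increment, convert the result with `rcm_inverse`, and send the angles and depth to the joints; because every commanded pose is expressed relative to the pivot, the shaft never pushes sideways on the port.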

Affiliated Institutions

- University of Waterloo

- Western University

- Robarts Research Institute

- Canadian Surgical Technologies and Advanced Robotics (London Health Sciences Centre)

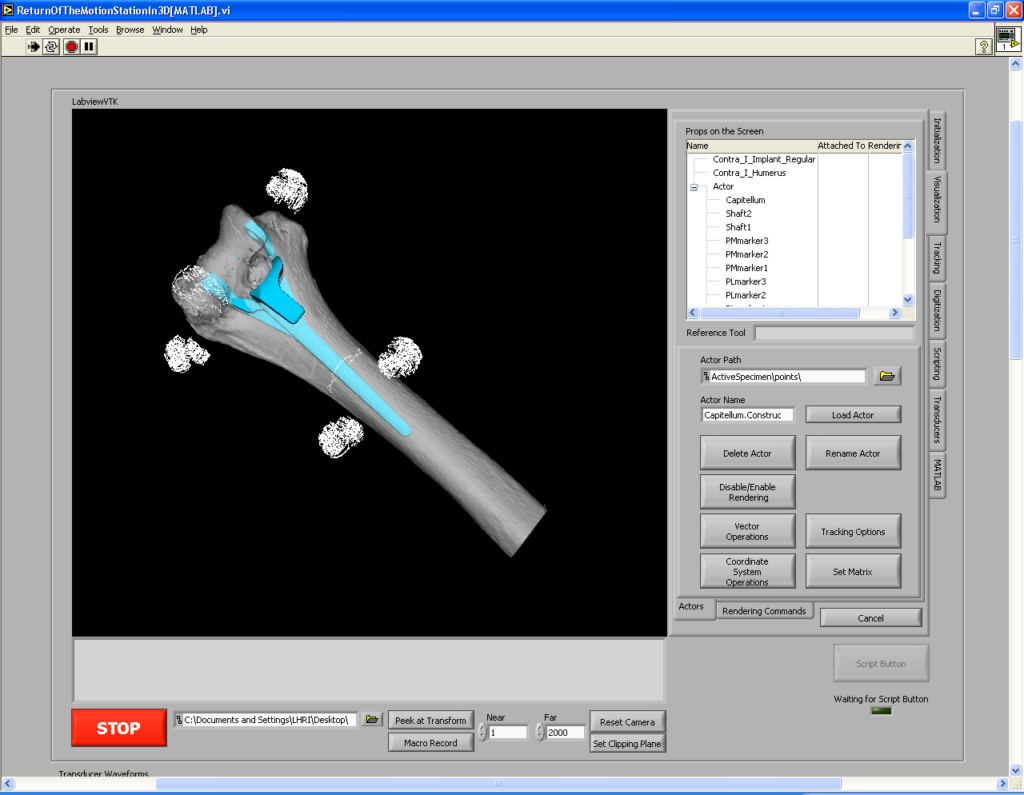

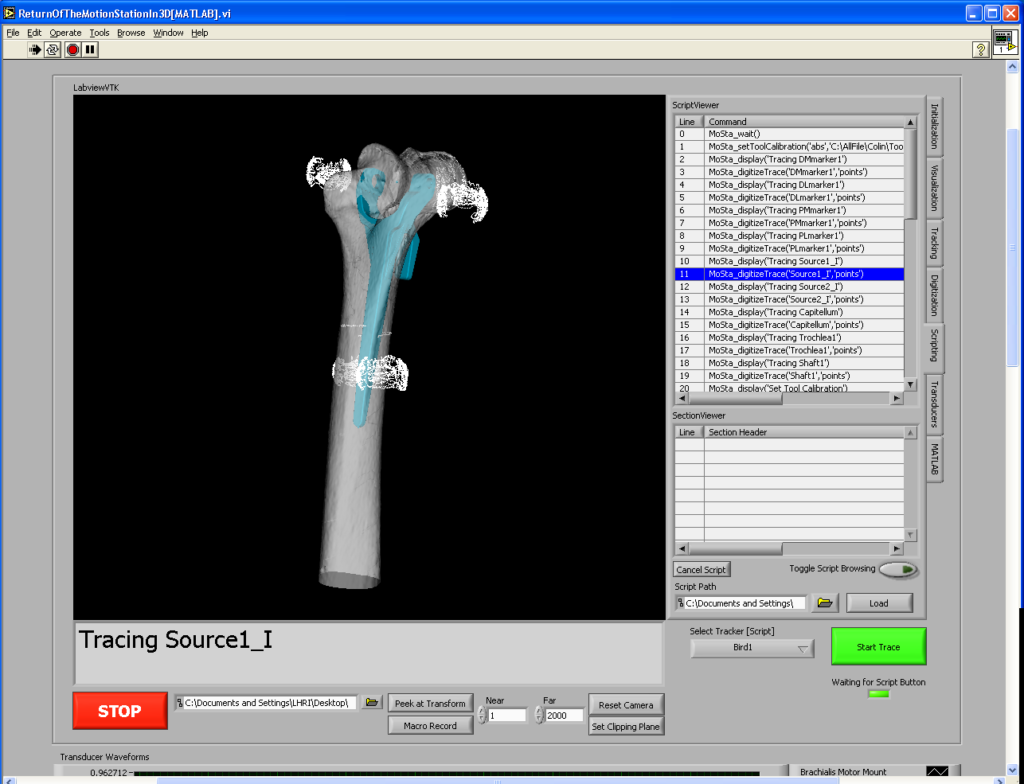

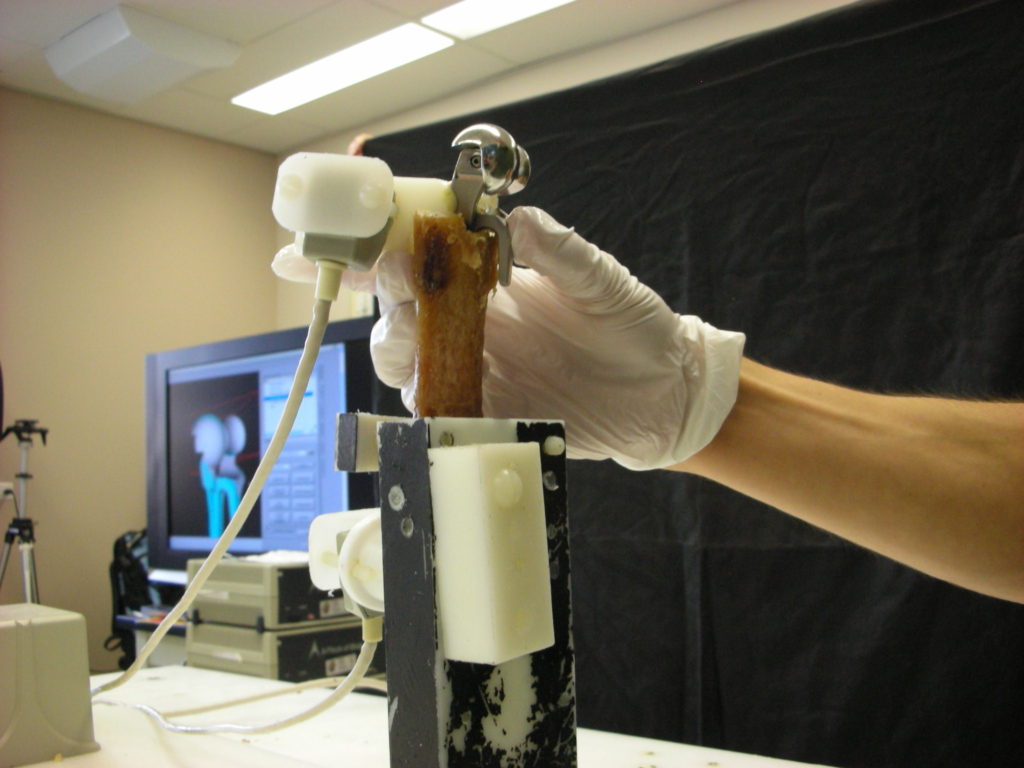

3D Visualization for Orthopedic Surgical Intervention

Description

I was involved in developing a software program called MotionStation3D to assist researchers and surgeons in computer-assisted surgery and joint kinematics studies. MotionStation3D is built on National Instruments' LabVIEW development environment and is designed to record and process motion data from multiple sensing instruments, display 3D spatial data, and send commands to actuate simulator hardware. Integrating the Visualization Toolkit (VTK), an open-source, freely available 3D computer graphics package, allows MotionStation3D to represent structures in true 3D space and provides a powerful engine for assisting with motion analysis, implantation, and surgical procedures. MotionStation3D can:

- Represent specimens and testing apparatus in real time using 3D models, whether derived from CT scans or from other 3D model sources;

- Simulate the motion of a tool or specimen in a virtual environment using 3D trackers (e.g., Flock of Birds and Optotrak); and

- Provide an interface between the trackers and the specimen in the virtual VTK 3D world.
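Linking tracker measurements to CT-derived models requires expressing both in a single coordinate frame. The description does not say how MotionStation3D does this; one common approach, shown here as a sketch, is paired-point rigid registration with the SVD-based Kabsch algorithm, followed by applying the resulting 4x4 transform to each tracked sensor pose. The function names are hypothetical, and NumPy stands in for the LabVIEW/VTK implementation.

```python
import numpy as np


def rigid_register(src, dst):
    """Least-squares rotation R and translation t such that dst is
    approximately R @ src + t for each paired point.

    src, dst: (N, 3) arrays of paired, non-collinear fiducials, e.g. landmarks
    digitized with a tracker (src) and the same landmarks picked on a
    CT-derived model (dst). SVD-based Kabsch method with a reflection guard.
    """
    src, dst = np.asarray(src, float), np.asarray(dst, float)
    cs, cd = src.mean(axis=0), dst.mean(axis=0)
    H = (src - cs).T @ (dst - cd)           # 3x3 cross-covariance
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))  # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = cd - R @ cs
    return R, t


def to_homogeneous(R, t):
    """Pack R and t into a 4x4 homogeneous transform."""
    T = np.eye(4)
    T[:3, :3], T[:3, 3] = R, t
    return T


def tracker_to_model(T_reg, sensor_pose):
    """Express a 4x4 tracker sensor pose in model (virtual scene) coordinates."""
    return T_reg @ sensor_pose
```

In a VTK scene, such a 4x4 matrix could be copied into a `vtkMatrix4x4` and applied to an actor with `SetUserMatrix`, so the rendered tool or specimen model moves with the tracked sensor.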